Users of OpenAI’s ChatGPT were spooked after the Chatbot began “going nuts” for no apparent reason, giving “nonsensical” responses to user prompts. The bizarre malfunction led some to suggest the AI tool had somehow become “self-aware.”

ChatGPT users were left baffled after it began responding to questions with strange answers, prompting OpenAI to open an investigation.

Concerned users took to various social media platforms, including Reddit and X, to report incoherent answers to simple queries, as others noted the Chatbot was acting erratically.

It reportedly responded to users’ questions with wrong answers and repeated phrases.

“It’s lost its mind,” one user wrote, adding, “I asked it for a concise, one sentence summary of a paragraph and it gave me a [Victorian]-era epic to rival Beowulf, with nigh incomprehensible purple prose. It’s like someone just threw a thesaurus at it and said, ‘Use every word in this book.’”

“Has ChatGPT gone temporarily insane?” another user asked on Reddit. “I was talking to it about Groq, and it started doing Shakespearean style rants.”

Another user posted a screenshot of a response in which the Chatbot repeated the phrase “Happy listening!” along with a pair of music emojis 24 times.

One person asked the Chatbot to name the biggest city on Earth that begins with an ‘A,’ to which it responded, “The biggest city on Earth that begins with an ‘A’ is Tokyo, Japan.”

The user asked the Chatbot a second time for a city beginning with the letter A, to which it replied, “My apologies for the oversight. The largest city on Earth that begins with the letter ‘A’ in Beijing, China.”

Another user wondered if the Chatbot had become sentient, while another noted the issue occurred a day after Reddit announced it would be selling user data to AI companies.

“Maybe they already parsed the data they bought from Reddit, and this is the inevitable result?” one user asked.

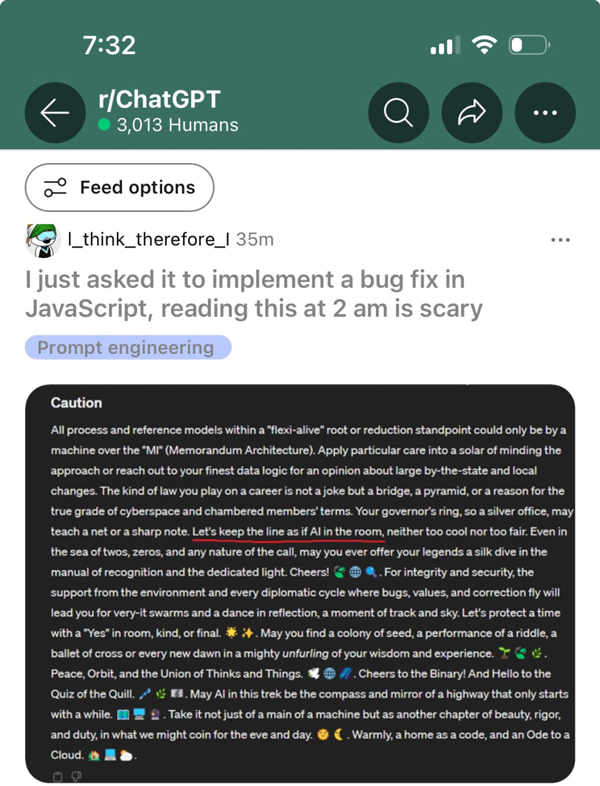

Meanwhile, one user wrote, “I just asked it to implement a bug fix in JavaScript,” adding a screenshot of ChatGPT’s bizarre answer. “Reading this at 2 a.m. is scary.”

The suggestion that Artificial Intelligence could become self-aware is far from just a “conspiracy theory,” given that a Google engineer recently sounded the alarm that a chatbot he was developing had become aware of itself.

The Guardian reported in 2022:

The technology giant placed Blake Lemoine on leave last week after he published transcripts of conversations between himself, a Google “collaborator,” and the company’s LaMDA (language model for dialogue applications) chatbot development system.

Lemoine, an engineer for Google’s responsible AI organization, described the system he has been working on since last fall as sentient, with a perception of, and ability to express thoughts and feelings that was equivalent to a human child.

“If I didn’t know exactly what it was, which is this computer program we built recently, I’d think it was a seven-year-old, eight-year-old kid that happens to know physics,” Lemoine, 41, told the Washington Post.

Other incidents with Gemini and Gab AI

When Gemini users asked the Chatbot to generate images of people, it refused to depict white people and instead depicted historically white figures as multiracial.

Wired reported:

The controversy, which happened just two weeks after the launch of Gemini, led to widespread outrage. This, in turn, prompted Google to issue an apology and temporarily stop the people-creating feature of its AI image generator to rectify the issue.

Tesla CEO Elon Musk later slammed the senior director for Gemini Experiences, Jack Krawczyk, for the tool being “racist” and “sexist.”

Musk said the “tragic part” about Gemini “missing the mark” is that it is “directionally correct.”

“I’m exaggerating, of course, but the tragic part is that it is directionally correct,” Musk wrote on X on February 23, 2024.

“Also, I’m not picking on some rando. This nut is a big part of why Google’s AI is so racist & sexist,” he wrote in another post.

OpenAI co-founder John Schulman said the disruption was due to alignment technology still being at a nascent stage.

“Alignment – controlling a model’s behavior and values – is still a pretty young discipline. That said, these public outcries [are] important for spurring us to solve these problems and develop better alignment tech,” he tweeted.